Lasso algorithm

Statistical method for regression analysis




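In statistics and machine learning, the lasso (least absolute shrinkage and selection operator; also Lasso or LASSO) is a regression analysis method that performs both feature selection and regularization in order to enhance the prediction accuracy and interpretability of the resulting statistical model. It was introduced in 1996 by Robert Tibshirani, a professor of statistics at Stanford University, building on Leo Breiman's nonnegative garrote (NNG).[1][2] The lasso was originally formulated for least squares models, and this simple case reveals a substantial amount about the behavior of the estimator, including its relationship to ridge regression (also known as Tikhonov regularization) and best subset selection, and the connection between lasso coefficient estimates and soft thresholding. It also reveals that, as in standard linear regression, the coefficient estimates need not be unique when covariates are collinear.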

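Though originally defined for least squares, lasso regularization extends in a straightforward way to a wide variety of statistical models, including generalized linear models, generalized estimating equations, proportional hazards models, and M-estimators.[3][4] The lasso's ability to perform subset selection relies on the form of the constraint and has a variety of interpretations, including in terms of geometry, Bayesian statistics, and convex analysis.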

The lasso is closely related to basis pursuit denoising.


History

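Robert Tibshirani originally introduced the lasso to improve the prediction accuracy and interpretability of regression models. He altered the model-fitting process so that only a subset of the provided covariates is used in the final model, rather than all of them. The method is based on Breiman's nonnegative garrote, which has a similar purpose but works differently.

Before the lasso, the most widely used method for choosing which covariates to include was stepwise selection. That approach improves prediction accuracy only in certain cases, such as when a few covariates have a strong relationship with the outcome; in other cases it can make prediction error worse. At the time, ridge regression was the most popular technique for improving prediction accuracy. Ridge regression reduces overfitting, and thereby prediction error, by shrinking large regression coefficients. It does not select covariates, however, and therefore does not help to make the model easier to build or interpret.

The lasso achieves both goals. It forces the sum of the absolute values of the regression coefficients to be less than a fixed value, which forces certain coefficients to zero and so effectively chooses a simpler model that excludes the corresponding covariates. This is similar to ridge regression, in which the sum of the squares of the coefficients is forced to be less than a fixed value. The difference is that ridge regression only shrinks the size of the coefficients and does not set any of them to zero.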

Basic form

The lasso was originally introduced in the context of least squares, and this case illustrates many of its properties in a simple and direct setting.

Consider a sample consisting of $N$ cases, each of which consists of $p$ covariates and a single outcome. Let $y_i$ be the outcome and $x_i := (x_{i1}, x_{i2}, \ldots, x_{ip})^T$ be the covariate vector for the $i$-th case. Then the objective of the lasso is to solve

$$\min_{\beta_0,\beta}\left\{\frac{1}{N}\sum_{i=1}^{N}\left(y_i-\beta_0-x_i^T\beta\right)^2\right\}\quad\text{subject to}\quad\sum_{j=1}^{p}|\beta_j|\le t.$$ [1]

Here $t$ is a prespecified free parameter that determines the degree of regularization. Let $X$ be the covariate matrix, so that $X_{ij}=(x_i)_j$ and $x_i^T$ is the $i$-th row of $X$. The expression can then be written more compactly as

$$\min_{\beta_0,\beta}\left\{\frac{1}{N}\left\|y-\beta_0 1_N-X\beta\right\|_2^2\right\}\quad\text{subject to}\quad\|\beta\|_1\le t,$$

where $\|u\|_p=\left(\sum_i |u_i|^p\right)^{1/p}$ is the standard $\ell^p$ norm of a vector $u$ (the sum runs over all components of $u$, and the norm index $p$ is unrelated to the number of covariates), and $1_N$ is the $N\times 1$ vector of ones.

Denoting by $\bar{x}$ the mean of the covariate vectors $x_i$ and by $\bar{y}$ the mean of the outcomes $y_i$, the resulting estimate of the intercept is $\hat{\beta}_0=\bar{y}-\bar{x}^T\beta$, so that

$$y_i-\hat{\beta}_0-x_i^T\beta=y_i-(\bar{y}-\bar{x}^T\beta)-x_i^T\beta=(y_i-\bar{y})-(x_i-\bar{x})^T\beta.$$

It is therefore standard to work with centered (zero-mean) variables. In addition, the covariates are typically standardized so that $\sum_{i=1}^{N}x_{ij}^2=1$ for each $j$, which makes the solution independent of the measurement scale.
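The centering and standardization steps can be sketched in Python with NumPy. This is a minimal illustration rather than a reference implementation: the helper names `center_and_scale` and `to_original_scale`, the synthetic data, and the coefficient vector `beta_std` are all assumptions made for the example. It checks numerically that residuals computed on the raw data with the intercept $\hat{\beta}_0=\bar{y}-\bar{x}^T\beta$ match the residuals computed on the centered, standardized data without an intercept.

```python
import numpy as np

def center_and_scale(X, y):
    """Center y and each column of X, then scale columns to unit sum of squares."""
    x_bar = X.mean(axis=0)
    y_bar = y.mean()
    X_centered = X - x_bar
    # Assumes no column is constant, otherwise the scale would be zero.
    scale = np.sqrt((X_centered ** 2).sum(axis=0))
    return X_centered / scale, y - y_bar, x_bar, y_bar, scale

def to_original_scale(beta_std, x_bar, y_bar, scale):
    """Convert coefficients for standardized data into (beta_0, beta) for raw data."""
    beta = beta_std / scale
    beta_0 = y_bar - x_bar @ beta  # intercept estimate: y_bar - x_bar^T beta
    return beta_0, beta

# Synthetic data with covariates on very different scales (illustration only).
rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=[1.0, 10.0, 100.0], size=(50, 3))
y = 2.0 + X @ np.array([1.0, 0.0, -0.03]) + rng.normal(size=50)

X_std, y_centered, x_bar, y_bar, scale = center_and_scale(X, y)
beta_std = np.array([0.5, -1.0, 2.0])  # arbitrary coefficients, not a fitted model
beta_0, beta = to_original_scale(beta_std, x_bar, y_bar, scale)

# Residuals agree whether computed on raw data with an intercept
# or on centered, standardized data without one.
residual_raw = y - beta_0 - X @ beta
residual_std = y_centered - X_std @ beta_std
print(np.allclose(residual_raw, residual_std))
```

Because the two residual vectors coincide for any coefficient vector, a solver can work on the standardized data alone and recover the intercept afterwards.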

With centered variables the intercept drops out, and the objective can be rewritten as

$$\min_{\beta\in\mathbb{R}^p}\left\{\frac{1}{N}\left\|y-X\beta\right\|_2^2\right\}\quad\text{subject to}\quad\|\beta\|_1\le t,$$

or, in the so-called Lagrangian form,

$$\min_{\beta\in\mathbb{R}^p}\left\{\frac{1}{N}\left\|y-X\beta\right\|_2^2+\lambda\|\beta\|_1\right\},$$

where the exact relationship between $t$ and $\lambda$ is data dependent.
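As a rough illustration of the Lagrangian form, the sketch below minimizes it with proximal gradient descent (ISTA). This is one of several possible solvers and is not a method described in this article. The function names, the synthetic data, and the iteration count are assumptions made for the example, and the data are assumed to be already centered. For each $\lambda$ it reports $t=\|\hat{\beta}\|_1$, the constraint level at which the constrained form has the same solution, which shows how the correspondence depends on the data.

```python
import numpy as np

def soft_threshold(z, alpha):
    """Move each entry of z towards zero by alpha, clipping at zero."""
    return np.sign(z) * np.maximum(np.abs(z) - alpha, 0.0)

def lasso_objective(X, y, beta, lam):
    """(1/N) * ||y - X beta||_2^2 + lam * ||beta||_1."""
    r = y - X @ beta
    return (r @ r) / X.shape[0] + lam * np.abs(beta).sum()

def lasso_ista(X, y, lam, n_iter=2000):
    """Proximal gradient descent on the Lagrangian-form objective."""
    n, p = X.shape
    # Lipschitz constant of the gradient of (1/N) * ||y - X beta||^2.
    lipschitz = 2.0 * np.linalg.norm(X, 2) ** 2 / n
    step = 1.0 / lipschitz
    beta = np.zeros(p)
    for _ in range(n_iter):
        grad = -2.0 / n * (X.T @ (y - X @ beta))
        beta = soft_threshold(beta - step * grad, step * lam)
    return beta

# Synthetic data (illustration only).
rng = np.random.default_rng(0)
N, p = 100, 5
X = rng.normal(size=(N, p))
y = X @ np.array([3.0, -2.0, 0.0, 0.0, 0.5]) + 0.1 * rng.normal(size=N)

# Smallest lam for which beta = 0 satisfies the optimality conditions.
lam_max = 2.0 / N * np.abs(X.T @ y).max()
for lam in (0.01, 0.1, 1.0, lam_max):
    beta_hat = lasso_ista(X, y, lam)
    t = np.abs(beta_hat).sum()  # constraint level matching this lam
    print(f"lam={lam:.3f}  t={t:.3f}  nonzero={np.count_nonzero(beta_hat)}")
```

Larger values of $\lambda$ correspond to smaller values of $t$, but the mapping between the two can only be read off after solving the problem for the data at hand.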

Orthonormal covariates

Some basic properties of the lasso estimator can now be considered.

Assume first that the covariates are orthonormal, so that $(x_i\mid x_j)=\delta_{ij}$, where $x_i$ here denotes the $i$-th covariate (the $i$-th column of $X$), $(\cdot\mid\cdot)$ is the inner product and $\delta_{ij}$ is the Kronecker delta, or, equivalently, $X^TX=I$. Then, using subgradient methods, it can be shown that

$$\hat{\beta}_j=S_{N\lambda/2}\left(\hat{\beta}_j^{\text{OLS}}\right)=\hat{\beta}_j^{\text{OLS}}\max\left(0,\,1-\frac{N\lambda}{2\,|\hat{\beta}_j^{\text{OLS}}|}\right),\qquad\text{where }\hat{\beta}^{\text{OLS}}=(X^TX)^{-1}X^Ty.$$ [1]

The threshold is $N\lambda/2$ because, with the squared-error term scaled by $1/N$, the stationarity condition for a nonzero coordinate is $\tfrac{2}{N}\left(\hat{\beta}_j-\hat{\beta}_j^{\text{OLS}}\right)+\lambda\,\operatorname{sign}(\hat{\beta}_j)=0$.

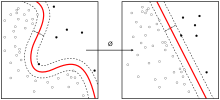

$S_\alpha$ is referred to as the soft thresholding operator, since it translates values towards zero (making them exactly zero if they are small enough) instead of setting smaller values to zero and leaving larger ones untouched, as the hard thresholding operator, often denoted $H_\alpha$, would.
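The difference between the two operators is easy to see on a small vector. The following sketch, with function names chosen for this example, applies soft and hard thresholding with the same threshold $\alpha=1$.

```python
import numpy as np

def soft_threshold(z, alpha):
    """S_alpha: shift every entry towards zero by alpha, clipping at zero."""
    return np.sign(z) * np.maximum(np.abs(z) - alpha, 0.0)

def hard_threshold(z, alpha):
    """H_alpha: keep entries with |z| >= alpha unchanged, zero out the rest."""
    return np.where(np.abs(z) >= alpha, z, 0.0)

z = np.array([-3.0, -1.0, -0.5, 0.0, 0.5, 1.0, 3.0])
soft = soft_threshold(z, 1.0)
hard = hard_threshold(z, 1.0)
print(soft)
print(hard)
```

Soft thresholding moves the surviving entries $\pm 3$ to $\pm 2$, whereas hard thresholding leaves them at $\pm 3$. Both set the entries of magnitude below the threshold to zero.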

This can be compared to ridge regression, where the objective is

$$\min_{\beta\in\mathbb{R}^p}\left\{\frac{1}{N}\left\|y-X\beta\right\|_2^2+\lambda\|\beta\|_2^2\right\},$$

yielding

$$\hat{\beta}_j=(1+N\lambda)^{-1}\hat{\beta}_j^{\text{OLS}}.$$

So ridge regression shrinks all coefficients by a uniform factor of $(1+N\lambda)^{-1}$ and does not set any coefficients to zero.
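The uniform shrinkage factor can be checked numerically. The sketch below builds a design matrix with orthonormal columns from a QR decomposition, solves the ridge normal equations directly, and compares the result with the closed form. The data, seed, and value of `lam` are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
N, p, lam = 40, 4, 0.05

# The Q factor of a reduced QR decomposition has orthonormal columns: X.T @ X = I.
X, _ = np.linalg.qr(rng.normal(size=(N, p)))
y = rng.normal(size=N)

beta_ols = X.T @ y  # (X^T X)^{-1} X^T y with X^T X = I

# Setting the gradient of (1/N)||y - X b||^2 + lam ||b||^2 to zero gives
# (X^T X + N lam I) b = X^T y.
beta_ridge = np.linalg.solve(X.T @ X + N * lam * np.eye(p), X.T @ y)

print(np.allclose(beta_ridge, beta_ols / (1.0 + N * lam)))
```

Every coefficient is multiplied by the same factor, so none of them becomes exactly zero unless the corresponding least squares estimate is already zero.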

It can also be compared to regression with best subset selection, in which the objective is

$$\min_{\beta\in\mathbb{R}^p}\left\{\frac{1}{N}\left\|y-X\beta\right\|_2^2+\lambda\|\beta\|_0\right\},$$

where $\|\cdot\|_0$ is the "$\ell^0$ norm", which is defined as $\|z\|_0=m$ if exactly $m$ components of $z$ are nonzero. In this case, it can be shown that

$$\hat{\beta}_j=H_{\sqrt{N\lambda}}\left(\hat{\beta}_j^{\text{OLS}}\right)=\hat{\beta}_j^{\text{OLS}}\,\mathrm{I}\left(\left|\hat{\beta}_j^{\text{OLS}}\right|\ge\sqrt{N\lambda}\right),$$

where $H_\alpha$ is the so-called hard thresholding function and $\mathrm{I}$ is an indicator function (it is 1 if its argument is true and 0 otherwise).
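For a small number of covariates, the hard thresholding result can be checked by brute force. The sketch below enumerates all $2^p$ supports for an orthonormal design, evaluates the $\ell^0$-penalized objective for the least squares fit on each support, and compares the best one with $H_{\sqrt{N\lambda}}$ applied to the least squares coefficients. The data, seed, and value of `lam` are arbitrary assumptions for the example.

```python
import itertools
import numpy as np

rng = np.random.default_rng(2)
N, p, lam = 30, 4, 0.02

# Orthonormal design: X.T @ X = I.
X, _ = np.linalg.qr(rng.normal(size=(N, p)))
y = X @ np.array([2.0, 0.0, -1.0, 0.3]) + 0.3 * rng.normal(size=N)
beta_ols = X.T @ y

def objective(beta):
    """(1/N) * ||y - X beta||_2^2 + lam * (number of nonzero coefficients)."""
    r = y - X @ beta
    return (r @ r) / N + lam * np.count_nonzero(beta)

best = None
for mask in itertools.product([False, True], repeat=p):
    # With orthonormal columns, the least squares fit restricted to a support
    # simply keeps the OLS coefficients on that support.
    beta = np.where(np.array(mask), beta_ols, 0.0)
    if best is None or objective(beta) < objective(best):
        best = beta

hard = np.where(np.abs(beta_ols) >= np.sqrt(N * lam), beta_ols, 0.0)
print(np.allclose(best, hard))
```

The exhaustive search is exponential in $p$ and is shown only to confirm the closed form on a toy problem.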

Therefore, the lasso estimates share features of the estimates from both ridge and best subset selection regression: they shrink the magnitude of all the coefficients, like ridge regression, and they also set some of them to zero, as in the best subset selection case. Additionally, while ridge regression scales all of the coefficients by a constant factor, the lasso instead translates the coefficients towards zero by a constant value and sets them to zero if they reach it.

Correlated covariates

Returning to the general case, in which the different covariates may not be independent, a special case may be considered in which two of the covariates, say $j$ and $k$, are identical for each case, so that $x_{(j)}=x_{(k)}$, where $x_{(j),i}=x_{ij}$. Then the values of $\beta_j$ and $\beta_k$ that minimize the lasso objective function are not uniquely determined. In fact, if there is some solution $\hat{\beta}$ in which $\hat{\beta}_j\hat{\beta}_k\ge 0$, then for any $s\in[0,1]$, replacing $\hat{\beta}_j$ by $s(\hat{\beta}_j+\hat{\beta}_k)$ and $\hat{\beta}_k$ by $(1-s)(\hat{\beta}_j+\hat{\beta}_k)$, while keeping all the other $\hat{\beta}_i$ fixed, gives a new solution, so the lasso objective function then has a continuum of valid minimizers.[5] Several variants of the lasso, including the Elastic Net, have been designed to address this shortcoming.
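The non-uniqueness argument can be illustrated numerically. The sketch below does not solve the lasso; it only shows that, when two columns of the design matrix are identical, the Lagrangian-form objective takes the same value at every point on the segment described above. The data and the starting vector `beta` are arbitrary assumptions for the example. It follows that if one point of the segment is a minimizer, every point is.

```python
import numpy as np

rng = np.random.default_rng(3)
N, lam = 50, 0.1
x1 = rng.normal(size=N)
x2 = rng.normal(size=N)
X = np.column_stack([x1, x2, x2])  # columns 1 and 2 (0-based) are identical
y = x1 + 2.0 * x2 + 0.1 * rng.normal(size=N)

def lasso_objective(beta):
    """(1/N) * ||y - X beta||_2^2 + lam * ||beta||_1."""
    r = y - X @ beta
    return (r @ r) / N + lam * np.abs(beta).sum()

beta = np.array([0.9, 1.2, 0.7])  # any vector with beta[1] * beta[2] >= 0
total = beta[1] + beta[2]

values = []
for s in np.linspace(0.0, 1.0, 5):
    # Redistribute the combined weight between the two identical columns.
    split = np.array([beta[0], s * total, (1.0 - s) * total])
    values.append(lasso_objective(split))

print(np.allclose(values, lasso_objective(beta)))
```

The fitted values depend only on the sum of the two coefficients, and the $\ell^1$ penalty depends only on that sum as long as both coefficients share a sign, which is why the objective is flat along the segment.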

General form

Interpretation of the algorithm

See also

- Dimensionality reduction

- Feature selection

References

[1] Tibshirani, Robert (1996). "Regression Shrinkage and Selection via the lasso". Journal of the Royal Statistical Society. Series B (Methodological). Wiley. 58 (1): 267–288. http://www.jstor.org/stable/2346178.

[2] Breiman, Leo (1995). "Better Subset Regression Using the Nonnegative Garrote". Technometrics. 37 (4): 373–384. ISSN 0040-1706. doi:10.2307/1269730.

[3] Tibshirani, Robert (1996). "Regression Shrinkage and Selection via the Lasso". Journal of the Royal Statistical Society. Series B (Methodological). 58 (1): 267–288.

[4] Tibshirani, Robert (1997). "The Lasso Method for Variable Selection in the Cox Model". Statistics in Medicine. 16 (4): 385–395. ISSN 1097-0258. doi:10.1002/(sici)1097-0258(19970228)16:4%3C385::aid-sim380%3E3.0.co;2-3.

[5] Citation error: no content was provided for the reference named "Zou 2005".
